Bundled LiteLLM support has been deprecated as of version 0.2.0.

LiteLLM Configuration

LiteLLM supports a variety of APIs, both OpenAI-compatible and others. To integrate a new API model, follow these instructions:

Initial Setup

To edit your config.yaml file from the host, bind-mount it by adding -v /path/to/litellm/config.yaml:/app/backend/data/litellm/config.yaml to your docker run command:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data -v /path/to/litellm/config.yaml:/app/backend/data/litellm/config.yaml --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Note: config.yaml does not need to exist on the host before running for the first time.

Configuring Open WebUI

-

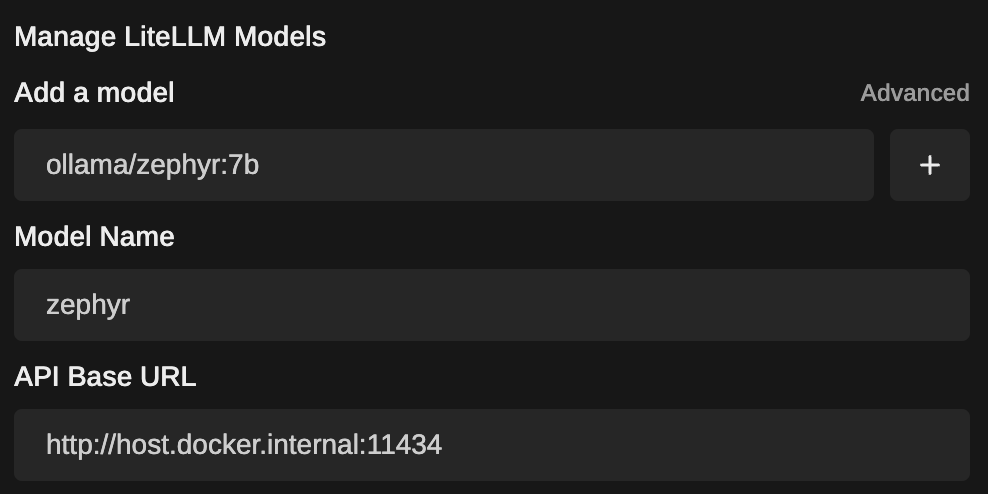

Go to the Settings > Models > Manage LiteLLM Models.

- In 'Simple' mode, you will only see the option to enter a Model.

-

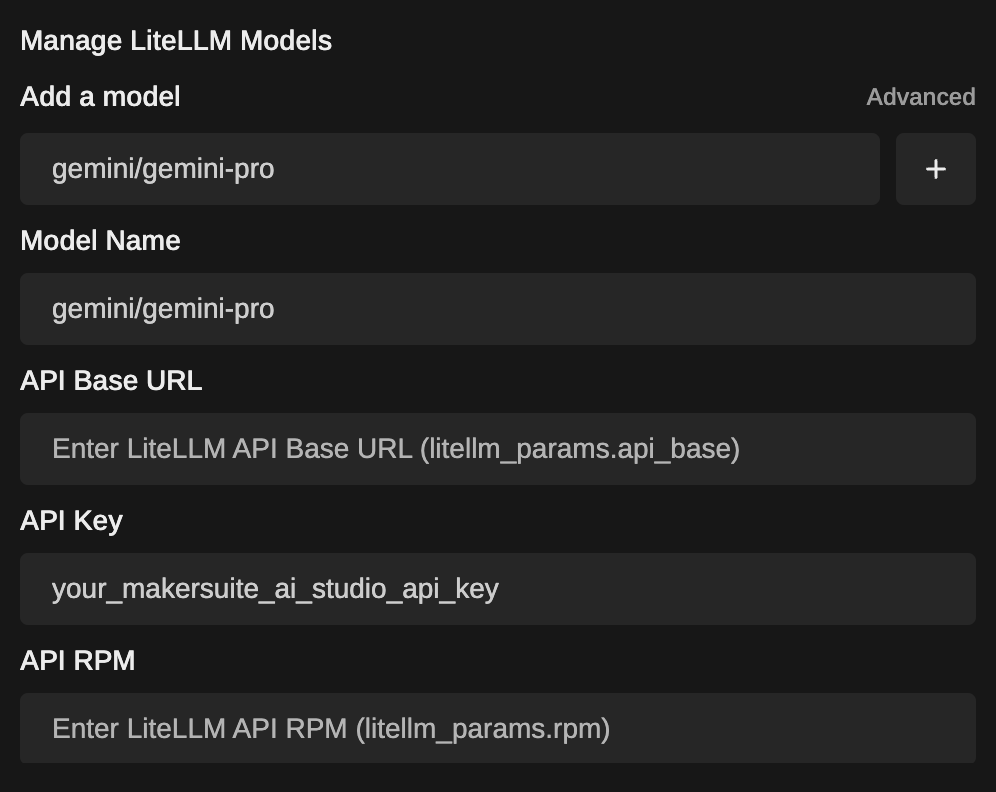

For additional configuration options, click on the 'Simple' toggle to switch to 'Advanced' mode. Here you can enter:

- Model Name: The name of the model as you want it to appear in the models list.

- API Base URL: The base URL for your API provider. This field can usually be left blank unless your provider specifies a custom endpoint URL.

- API Key: Your unique API key. Replace with the key provided by your API provider.

- API RPM: The allowed requests per minute for your API. Replace with the appropriate value for your API plan.

- After entering all the required information, click the '+' button to add the new model to LiteLLM.

Examples

Ollama API (from inside Docker):
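
As a rough sketch, an equivalent entry can also be written straight into the bind-mounted config.yaml. The script below assumes LiteLLM's usual model_list layout, a hypothetical host path of ./litellm/config.yaml, and the placeholder names ollama-llama2 and ollama/llama2; substitute whichever model you have pulled in Ollama. The host.docker.internal address relies on the --add-host flag from the docker run command above, and 11434 is Ollama's default port.

```shell
#!/usr/bin/env sh
set -eu

# Hypothetical host-side location; point this at the file you bind-mount.
CONFIG_PATH="${CONFIG_PATH:-./litellm/config.yaml}"
mkdir -p "$(dirname "$CONFIG_PATH")"

# Write a single-model LiteLLM config. This overwrites any existing file.
# "ollama-llama2" is the display name; "ollama/llama2" is a placeholder
# for the provider-prefixed model you actually run in Ollama.
cat > "$CONFIG_PATH" <<'EOF'
model_list:
  - model_name: ollama-llama2
    litellm_params:
      model: ollama/llama2
      api_base: http://host.docker.internal:11434
EOF

echo "Wrote $CONFIG_PATH"
```

In the 'Advanced' form, the same values would go into Model Name, Model, and API Base URL; the API Key and API RPM fields are left out of this sketch because a local Ollama server typically needs neither.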

Gemini API (MakerSuite/AI Studio):
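
A hosted provider such as Gemini mainly needs a model identifier and an API key. The sketch below assumes LiteLLM's usual model_list layout and a hypothetical host path of ./litellm/config.yaml; the names gemini-pro and gemini/gemini-pro, the key placeholder, and the rpm value of 60 are illustrative and should be replaced with the values for your account and plan.

```shell
#!/usr/bin/env sh
set -eu

# Hypothetical host-side location; point this at the file you bind-mount.
CONFIG_PATH="${CONFIG_PATH:-./litellm/config.yaml}"
mkdir -p "$(dirname "$CONFIG_PATH")"

# Write a single-model LiteLLM config. This overwrites any existing file.
# YOUR_GEMINI_API_KEY and rpm: 60 are placeholders, not real values.
cat > "$CONFIG_PATH" <<'EOF'
model_list:
  - model_name: gemini-pro
    litellm_params:
      model: gemini/gemini-pro
      api_key: YOUR_GEMINI_API_KEY
      rpm: 60
EOF

echo "Wrote $CONFIG_PATH"
```

No API base URL is set here, since that field can usually stay blank unless the provider specifies a custom endpoint. Several providers can share one file by listing further entries under model_list.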

Advanced configuration options not covered in the settings interface can be set by editing the config.yaml file manually. For more information on specific providers and advanced settings, consult the LiteLLM Providers Documentation.