Starting with OpenAI-Compatible Servers

Overview

Open WebUI isn't just for OpenAI/Ollama/Llama.cpp—you can connect any server that implements the OpenAI-compatible API, running locally or remotely. This is perfect if you want to run different language models, or if you already have a favorite backend or ecosystem.

Protocol-Oriented Design

Open WebUI is built around Standard Protocols. Instead of building specific modules for every individual AI provider (which leads to inconsistent behavior and configuration bloat), Open WebUI focuses on the OpenAI Chat Completions Protocol.

This means that while Open WebUI handles the interface and tools, it expects your backend to follow the universal Chat Completions standard.

- We Support Protocols: Any provider that follows the OpenAI Chat Completions standard (like Groq, OpenRouter, or LiteLLM) is natively supported.

- We Avoid Proprietary APIs: We do not implement provider-specific, non-standard APIs (such as OpenAI's stateful Responses API or Anthropic's native Messages API) to maintain a universal, maintainable codebase.

If you are using a provider that requires a proprietary API, we recommend using a proxy tool like LiteLLM or OpenRouter to bridge them to the standard OpenAI protocol supported by Open WebUI.
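To make "following the Chat Completions standard" concrete, here is a minimal sketch of the response shape a compatible backend returns for a non-streaming request, and how a client reads the reply from it. The sample values and the `extract_reply` helper are illustrative assumptions, not Open WebUI code.

```python
# Abbreviated example of a non-streaming Chat Completions response body.
# The id, model name, and text are made-up placeholder values.
SAMPLE_RESPONSE = {
    "id": "chatcmpl-example",
    "object": "chat.completion",
    "model": "example-model",
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "Hello!"},
            "finish_reason": "stop",
        }
    ],
}


def extract_reply(response: dict) -> str:
    """Return the assistant text from the first choice of a response."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response has no choices; is the backend Chat Completions compatible?")
    return choices[0]["message"]["content"]


print(extract_reply(SAMPLE_RESPONSE))
```

A backend that returns a different top-level structure (for example, a provider's native message format) would fail this kind of check, which is the situation where a bridging proxy helps.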

Popular Compatible Servers and Providers

There are many servers and tools that expose an OpenAI-compatible API. Pick whichever suits your workflow:

- Local Runners: Ollama, Llama.cpp, LM Studio, vLLM, LocalAI, Lemonade, Docker Model Runner.

- Cloud Providers: Groq, Mistral AI, Perplexity, MiniMax, DeepSeek, OpenRouter, LiteLLM.

- Major Model Ecosystems:

  - Google Gemini: Offers an OpenAI-compatible endpoint (requires a Gemini API key).

  - Anthropic: Primarily uses a proprietary API, but also offers a Chat Completions-compatible endpoint for easier integration.

  - Azure OpenAI: Enterprise-grade OpenAI hosting via Microsoft Azure.

Step 1: Start Your OpenAI-Compatible Server

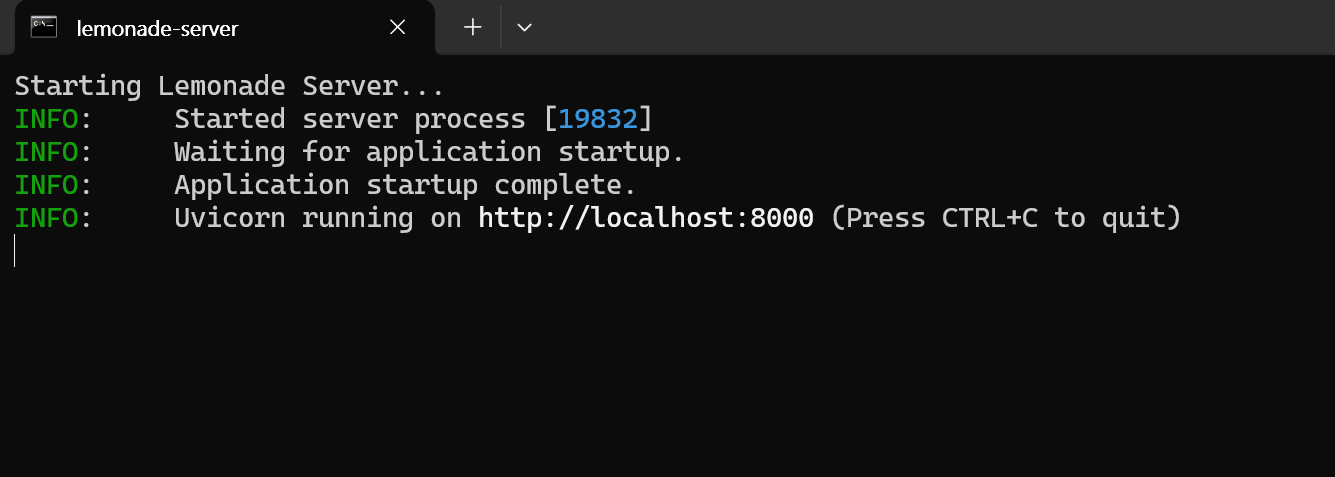

🍋 Get Started with Lemonade

Lemonade is a plug-and-play ONNX-based OpenAI-compatible server. Here’s how to try it on Windows:

- Run Lemonade_Server_Installer.exe.

- Install and download a model using Lemonade’s installer.

- Once running, your API endpoint will be: http://localhost:8000/api/v0

See their docs for details.

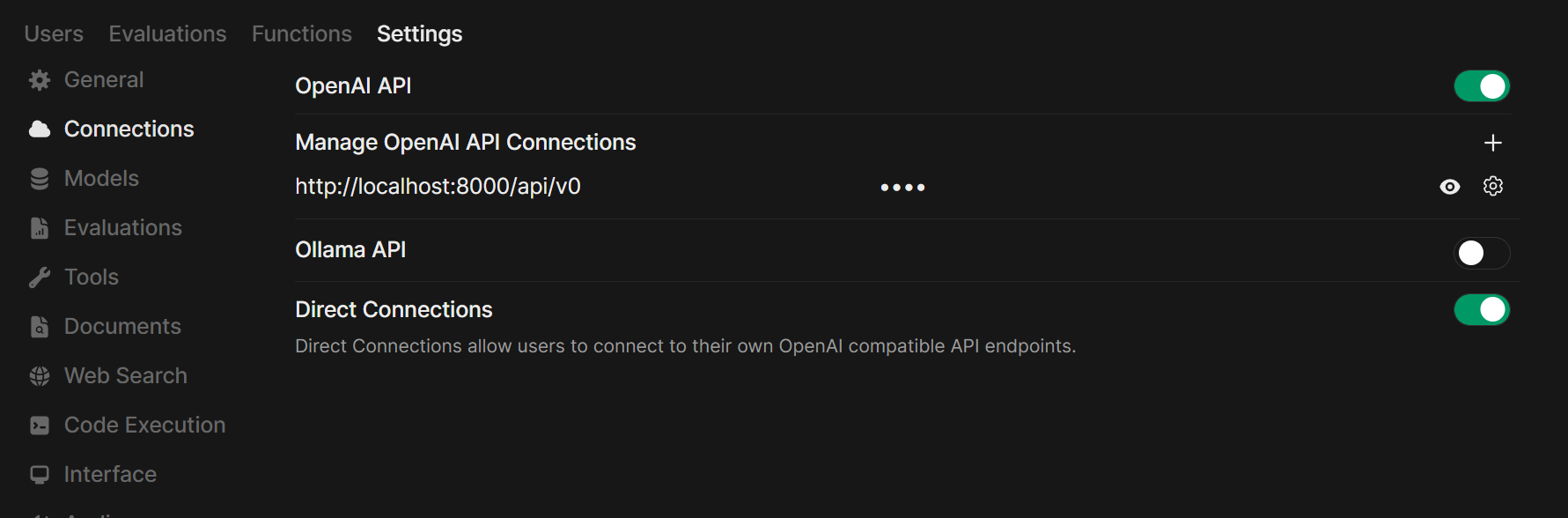

Step 2: Connect Your Server to Open WebUI

- Open Open WebUI in your browser.

- Go to ⚙️ Admin Settings → Connections → OpenAI.

- Click ➕ Add Connection.

- Select the Standard / Compatible tab (if available).

- Fill in the following:

  - API URL: Use your server’s API endpoint.

    - Examples: http://localhost:11434/v1 (Ollama), http://localhost:10000/v1 (Llama.cpp).

  - API Key: Leave blank unless the server requires one.

- Click Save.

If your local server is slow to start or you're connecting over a high-latency network, you can adjust the model list fetch timeout:

# Model list fetch timeout in seconds (default is 10).
# Raise it for slow or high-latency servers; lower it (as here) to fail faster on unreachable ones.
AIOHTTP_CLIENT_TIMEOUT_MODEL_LIST=5

If you've saved an unreachable URL and the UI becomes unresponsive, see the Model List Loading Issues troubleshooting guide for recovery options.

If Open WebUI runs in Docker and your model server runs on the host machine, use http://host.docker.internal:<your-port>/v1 instead of localhost, because localhost inside the container refers to the container itself.
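As a small illustration of the Docker case, the sketch below rewrites a loopback URL to its `host.docker.internal` equivalent while keeping the port and path. The helper name `to_docker_host_url` is hypothetical, and the function ignores any credentials embedded in the URL.

```python
from urllib.parse import urlsplit, urlunsplit

# Hosts that point back at the container itself when used inside Docker.
LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "::1"}


def to_docker_host_url(url: str) -> str:
    """Return url with a loopback host swapped for host.docker.internal."""
    parts = urlsplit(url)
    if parts.hostname not in LOOPBACK_HOSTS:
        return url  # not a loopback address, leave it unchanged
    netloc = "host.docker.internal"
    if parts.port is not None:
        netloc += f":{parts.port}"  # keep the original port
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


print(to_docker_host_url("http://localhost:11434/v1"))
```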

For Lemonade: When adding Lemonade, use http://localhost:8000/api/v0 as the URL.
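Before saving a connection, it can help to confirm the URL from a script. The following is a rough sketch using only the Python standard library; `endpoint_url`, `auth_headers`, and `list_model_ids` are hypothetical helper names, and the response parsing assumes the usual OpenAI-style model list with a `data` array of objects that each have an `id`.

```python
import json
import urllib.request


def endpoint_url(base_url: str, path: str) -> str:
    """Join an API base URL and an endpoint path with exactly one slash."""
    return base_url.rstrip("/") + "/" + path.lstrip("/")


def auth_headers(api_key: str = "") -> dict:
    """Send a Bearer token only when a key is configured."""
    return {"Authorization": f"Bearer {api_key}"} if api_key else {}


def list_model_ids(base_url: str, api_key: str = "", timeout: float = 10.0) -> list:
    """GET <base_url>/models and return the model ids (needs a running server)."""
    request = urllib.request.Request(
        endpoint_url(base_url, "models"), headers=auth_headers(api_key)
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return [model["id"] for model in payload.get("data", [])]


# The base URL already carries the version prefix, so only the endpoint name is appended.
print(endpoint_url("http://localhost:11434/v1", "models"))
print(endpoint_url("http://localhost:8000/api/v0/", "/chat/completions"))
```

With a server running, `list_model_ids("http://localhost:8000/api/v0")` should return the same model names that later appear in the chat menu. Note that the base URL does not have to end in `/v1`: Lemonade's base is `/api/v0`.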

Required API Endpoints

To ensure full compatibility with Open WebUI, your server should implement the following OpenAI-standard endpoints:

| Endpoint | Method | Required? | Purpose |
|---|---|---|---|
| /v1/models | GET | Yes | Used for model discovery and selecting models in the UI. |
| /v1/chat/completions | POST | Yes | The core endpoint for chat, supporting streaming and parameters like temperature. |
| /v1/embeddings | POST | No | Required if you want to use this provider for RAG (Retrieval Augmented Generation). |
| /v1/audio/speech | POST | No | Required for Text-to-Speech (TTS) functionality. |
| /v1/audio/transcriptions | POST | No | Required for Speech-to-Text (STT/Whisper) functionality. |
| /v1/images/generations | POST | No | Required for Image Generation (DALL-E) functionality. |
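The endpoint requirements can be expressed as a simple lookup. This sketch takes the set of paths a server implements and reports whether chat will work and which optional features become available; the function name and the result keys are assumptions made for illustration.

```python
# Endpoints that must exist for basic chat to work.
REQUIRED = {"/v1/models", "/v1/chat/completions"}

# Optional endpoints and the feature each one enables.
OPTIONAL_FEATURES = {
    "/v1/embeddings": "RAG embeddings",
    "/v1/audio/speech": "Text-to-Speech",
    "/v1/audio/transcriptions": "Speech-to-Text",
    "/v1/images/generations": "Image generation",
}


def summarize_support(implemented: set) -> dict:
    """Report chat readiness and optional features for a set of endpoint paths."""
    missing = sorted(REQUIRED - implemented)
    features = [name for path, name in OPTIONAL_FEATURES.items() if path in implemented]
    return {
        "chat_ready": not missing,
        "missing_required": missing,
        "optional_features": features,
    }


print(summarize_support({"/v1/models", "/v1/chat/completions", "/v1/embeddings"}))
```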

Supported Parameters

Open WebUI passes standard OpenAI parameters such as temperature, top_p, max_tokens (or max_completion_tokens), stop, seed, and logit_bias. It also supports Tool Use (Function Calling) if your model and server support the tools and tool_choice parameters.
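For reference, a request body using these parameters might be assembled as in the sketch below. The `build_chat_request` helper, the model name, and the `get_weather` tool are hypothetical; the field names follow the OpenAI Chat Completions request format, and unset parameters are omitted so the server's defaults apply.

```python
import json


def build_chat_request(model, user_message, *, temperature=None, top_p=None,
                       max_tokens=None, stop=None, seed=None,
                       tools=None, tool_choice=None):
    """Build a Chat Completions request body, leaving out unset parameters."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    optional = {
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
        "stop": stop,
        "seed": seed,
        "tools": tools,
        "tool_choice": tool_choice,
    }
    payload.update({key: value for key, value in optional.items() if value is not None})
    return payload


# Hypothetical tool definition in the OpenAI function-calling format.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

body = build_chat_request(
    "example-model", "What's the weather in Paris?",
    temperature=0.2, tools=[weather_tool], tool_choice="auto",
)
print(json.dumps(body, indent=2))
```

Whether `tools` and `tool_choice` have any effect depends on the model and server, as noted above.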

Step 3: Start Chatting!

Select your connected server’s model in the chat menu and get started!

That’s it! Whether you choose Llama.cpp, Ollama, LM Studio, or Lemonade, you can easily experiment and manage multiple model servers—all in Open WebUI.

🚀 Enjoy building your perfect local AI setup!