Gemini

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

Open WebUI also supports image generation through the Google AI Studio API, also known as the Gemini API.

Initial Setup

- Obtain an API key from Google AI Studio. Alternatively, create an API key in Google Cloud and enable the Generative Language API for the project.

- You most likely need to create a project and enable the Generative Language API, in addition to adding billing information, because the image generation API is not available for free.

If you are using a free API key, you still need a payment method on file. A missing or invalid payment method is a frequent cause of errors during setup.

Alternatively, if you are using Vertex AI, you can create an API key in Google Cloud instead of a service account. This key works as long as it is assigned the appropriate permissions and the Generative Language API is enabled for the project.
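One way to check a key before entering it in Open WebUI is to list the models it can see. The sketch below is an illustration only, not part of Open WebUI: the function names are hypothetical, it reads just the first page of results, and it assumes the key is passed in the `x-goog-api-key` header.

```python
import json
import urllib.request

BASE_URL = "https://generativelanguage.googleapis.com/v1beta"


def build_list_models_request(api_key: str) -> urllib.request.Request:
    """Build a GET request for the models listing (hypothetical helper)."""
    return urllib.request.Request(
        f"{BASE_URL}/models",
        headers={"x-goog-api-key": api_key},
        method="GET",
    )


def supported_methods(listing: dict) -> dict:
    """Map each model name to the generation methods it reports."""
    return {
        model["name"]: model.get("supportedGenerationMethods", [])
        for model in listing.get("models", [])
    }


def fetch_models(api_key: str) -> dict:
    """Perform the call. Needs network access and a valid key."""
    request = build_list_models_request(api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return supported_methods(json.load(response))
```

If `fetch_models` raises an HTTP error, the key, the billing setup, or the enabled APIs on the project are the first things to check.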

Configuring Open WebUI

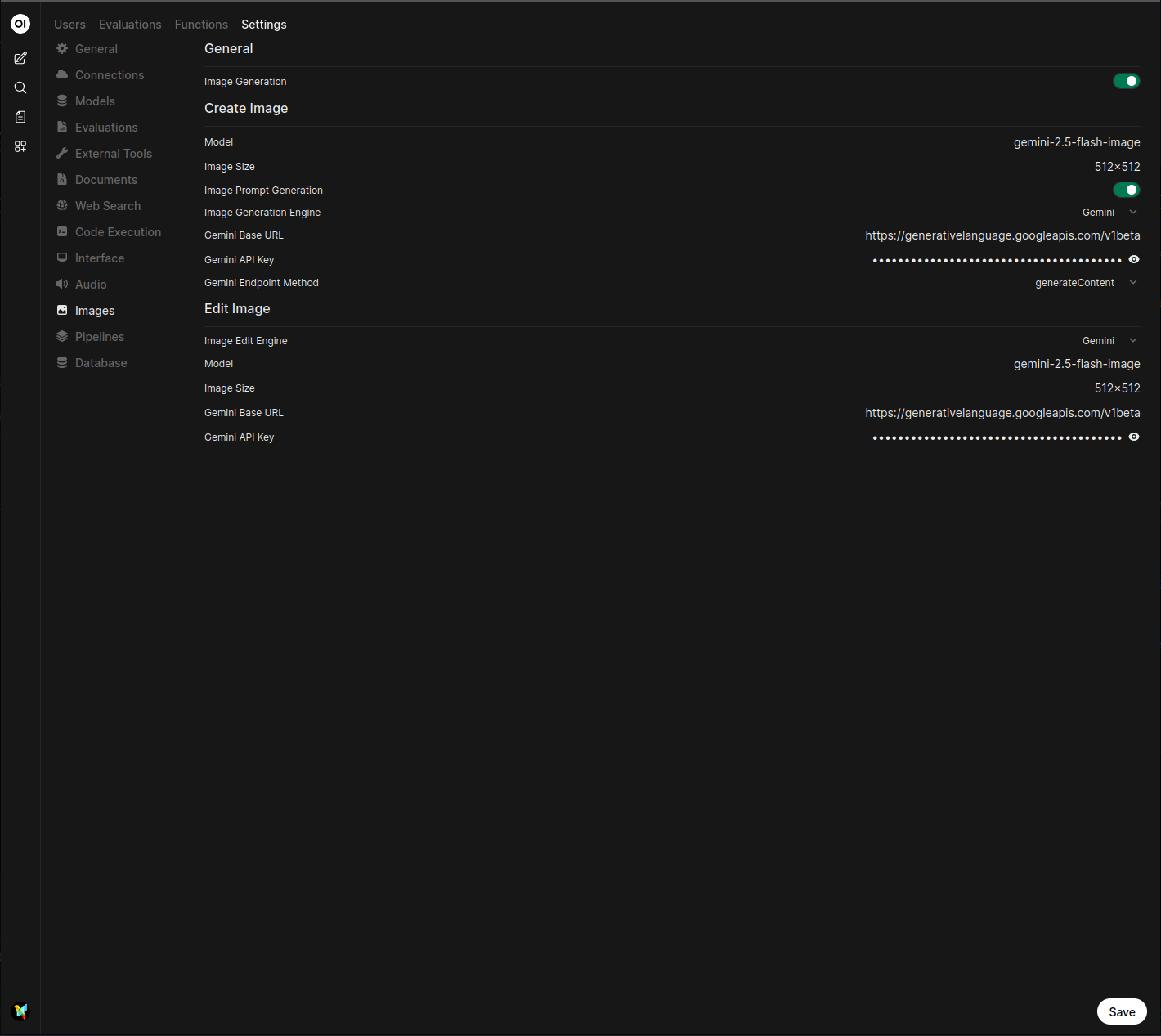

- In Open WebUI, navigate to the Admin Panel > Settings > Images menu.

- Set the Image Generation Engine field to Gemini.

- Set the API Base URL to https://generativelanguage.googleapis.com/v1beta.

- Enter your Google AI Studio API key.

- Enter the model you wish to use from these available models.

- Set the image size to one of the available image sizes.
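To make the settings above concrete, here is a rough sketch of the kind of REST call they describe: a POST to the `:generateContent` method under the base URL, with the prompt as a text part. This is an assumption-laden illustration rather than Open WebUI's actual implementation; the helper names are hypothetical, and the response parsing assumes images come back as base64 `inlineData` parts.

```python
import base64
import json
import urllib.request


def build_generate_request(base_url: str, api_key: str, model: str, prompt: str):
    """Build a generateContent request for an image-capable Gemini model."""
    url = f"{base_url.rstrip('/')}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_images(response: dict) -> list:
    """Collect decoded image bytes from every inlineData part in a response."""
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            inline = part.get("inlineData")
            if inline and "data" in inline:
                images.append(base64.b64decode(inline["data"]))
    return images
```

Sending the request with `urllib.request.urlopen` and passing the parsed JSON to `extract_images` would yield the raw image bytes, assuming the call succeeds.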

Example Minimal Setup

The two example setups that follow are listed in this order:

- Gemini 3.0 Pro (aka Nano Banana Pro)

- Gemini 2.5 Flash (aka Nano Banana)

Create Image

- Create Image Model: gemini-3-pro-image-preview

- Image Size: 2816x1536

- Image Prompt Generation: on

- Image Generation Engine: Gemini

- Gemini Base URL: https://generativelanguage.googleapis.com/v1beta

- Gemini API Key: Enter your API Key

- Gemini Endpoint Method: generateContent

Edit Image

- Image Edit Engine: Gemini

- Model: gemini-3-pro-image-preview

- Image Size: (can be left empty)

- Gemini Base URL: https://generativelanguage.googleapis.com/v1beta

- Gemini API Key: Enter your API Key

Create Image

- Create Image Model: gemini-2.5-flash-image

- Image Size: 2816x1536

- Image Prompt Generation: on

- Image Generation Engine: Gemini

- Gemini Base URL: https://generativelanguage.googleapis.com/v1beta

- Gemini API Key: Enter your API Key

- Gemini Endpoint Method: generateContent

Edit Image

- Image Edit Engine: Gemini

- Model: gemini-2.5-flash-image

- Image Size: (can be left empty)

- Gemini Base URL: https://generativelanguage.googleapis.com/v1beta

- Gemini API Key: Enter your API Key
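For the Edit Image settings, the underlying request plausibly differs from plain generation only in that the source image travels alongside the prompt. The sketch below shows one way such a request body could be assembled. It assumes the `inline_data` / `mime_type` field spelling used in Google's REST examples; `build_edit_body` is a hypothetical helper, not an Open WebUI function.

```python
import base64


def build_edit_body(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Pair an edit instruction with the image to edit, base64-encoded."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {"inline_data": {"mime_type": mime_type, "data": encoded}},
                ]
            }
        ]
    }
```

The resulting dictionary would be sent as the JSON body of a `:generateContent` call on the same base URL and model configured above.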

This feature appears to work only for models supported by this endpoint: https://generativelanguage.googleapis.com/v1beta/models/<MODEL_NAME>:predict

This is the OpenAI BETA endpoint, which Google provides for experimental OpenAI compatibility.

Google Imagen models use this endpoint, while Gemini models use a different endpoint ending with :generateContent.

Imagen model endpoint example:

- https://generativelanguage.googleapis.com/v1beta/models/imagen-4.0-generate-001:predict

- Documentation for Imagen models

Gemini model endpoint example:

- https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent

- Documentation for Gemini models

Calling a Gemini model such as gemini-3-pro-image-preview through the :predict endpoint results in an error, because the two model families support different endpoints for image generation.

400: [ERROR: models/gemini-3-pro-image-preview is not found for API version v1beta, or is not supported for predict. Call ListModels to see the list of available models and their supported methods.]
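The endpoint difference described above can be sketched as a small helper that picks the method suffix from the model name. Treat the `imagen-` prefix check as an assumption for illustration: it matches the two examples in this section, but it is not an official rule, and `endpoint_for` is a hypothetical name.

```python
BASE_URL = "https://generativelanguage.googleapis.com/v1beta"


def endpoint_for(model: str, base_url: str = BASE_URL) -> str:
    """Return the full endpoint URL for an image model.

    Assumption: names starting with "imagen-" use :predict and
    everything else uses :generateContent.
    """
    method = "predict" if model.startswith("imagen-") else "generateContent"
    return f"{base_url.rstrip('/')}/models/{model}:{method}"


if __name__ == "__main__":
    print(endpoint_for("imagen-4.0-generate-001"))
    print(endpoint_for("gemini-3-pro-image-preview"))
```

Sending a Gemini model name to the `:predict` form of the URL is the mismatch that produces the 400 error quoted above.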

LiteLLM Proxy with Gemini Endpoints

Open WebUI supports image generation through a LiteLLM proxy that uses Gemini or Imagen endpoints. Configure image generation as follows:

- In Open WebUI, navigate to the Admin Panel > Settings > Images menu.

- Set the Image Generation Engine field to Open AI.

- Change the API endpoint URL to https://<your-litellm-url>:<port>/v1.

- Enter your LiteLLM API key.

- The API version can be left blank.

- Enter the image model name as it appears in your LiteLLM configuration.

- Set the image size to one of the available sizes for the selected model.

To find your LiteLLM connection information, navigate to the Admin Panel > Settings > Connections menu. Your connection information will be listed under the Gemini API connection.
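Because this setup uses the Open AI engine, the proxy is addressed through an OpenAI-style image generation route. The sketch below shows roughly what such a request looks like, which can help when testing the proxy outside Open WebUI. The host, port, key, and model alias in the usage note are placeholders, and `build_image_request` is a hypothetical helper. It assumes the proxy exposes `/images/generations` under the `/v1` base URL.

```python
import json
import urllib.request


def build_image_request(base_url: str, api_key: str, model: str,
                        prompt: str, size: str = "1024x1024"):
    """Build an OpenAI-style image generation request for a proxy."""
    body = {"model": model, "prompt": prompt, "n": 1, "size": size}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/images/generations",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

For example, `build_image_request("https://litellm.example:4000/v1", "sk-placeholder", "my-imagen-alias", "a lighthouse at dusk")` builds a request that can be sent with `urllib.request.urlopen`, where the model argument is the name from your own LiteLLM configuration.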