Usage

Before you can use image generation, make sure the Image Generation toggle is enabled in Admin Panel > Settings > Images.

Using Image Generation

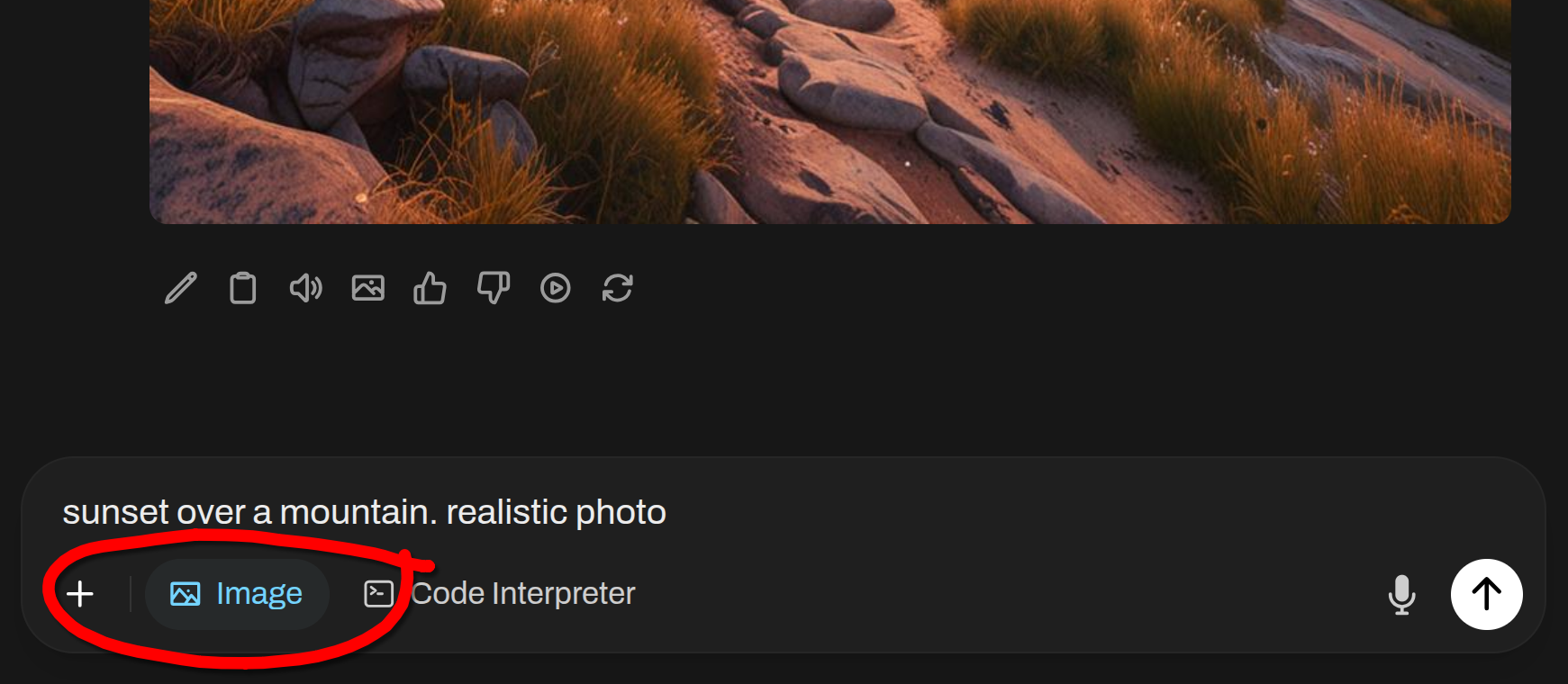

- Toggle the Image Generation switch to on.

- Enter your image generation prompt.

- Click Send.

Native Tool-Based Generation (Agentic)

If your model is configured with Native Function Calling (see the Central Tool Calling Guide), it can invoke image generation directly as a tool.

How it works:

- Requirement: The Image Generation feature must be toggled ON for the chat or model. This grants the model "permission" to use the tool.

- Natural Language: You can simply ask the model: "Generate an image of a cybernetic forest."

- Action: If Native Mode is active and the feature is enabled, the model will invoke the generate_image tool.

- Display: The generated image is displayed directly in the chat interface.

- Editing: This also supports Image Editing (inpainting) via the edit_image tool (e.g., "Make the sky in this image red").

This approach allows the model to "reason" about the prompt before generating, or even generate multiple images as part of a complex request.
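To make the flow concrete, here is a minimal Python sketch of how tool gating and dispatch might look in an OpenAI-style function-calling setup. It is not Open WebUI's implementation. Only the tool names generate_image and edit_image come from this page. The schemas, the prompt parameter, available_tools, dispatch and the handler lambdas are all hypothetical.

```python
import json
from typing import Any, Callable

# Hypothetical schemas in the OpenAI function-calling format. The tool names
# match this page; the parameter names are assumptions.
GENERATE_IMAGE_TOOL = {
    "type": "function",
    "function": {
        "name": "generate_image",
        "description": "Generate an image from a text prompt.",
        "parameters": {
            "type": "object",
            "properties": {"prompt": {"type": "string"}},
            "required": ["prompt"],
        },
    },
}

EDIT_IMAGE_TOOL = {
    "type": "function",
    "function": {
        "name": "edit_image",
        "description": "Edit an image in the conversation according to a prompt.",
        "parameters": {
            "type": "object",
            "properties": {"prompt": {"type": "string"}},
            "required": ["prompt"],
        },
    },
}


def available_tools(image_generation_enabled: bool) -> list[dict[str, Any]]:
    """Offer the image tools only when the Image Generation toggle is on."""
    return [GENERATE_IMAGE_TOOL, EDIT_IMAGE_TOOL] if image_generation_enabled else []


def dispatch(tool_calls: list[dict[str, Any]], handlers: dict[str, Callable[[str], str]]) -> list[str]:
    """Run every tool call the model emitted; one request may yield several images."""
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append(handlers[name](args["prompt"]))
    return results


# Usage with hypothetical handlers standing in for a real image backend.
handlers = {
    "generate_image": lambda p: f"generated: {p}",
    "edit_image": lambda p: f"edited: {p}",
}
calls = [
    {"function": {"name": "generate_image", "arguments": json.dumps({"prompt": "a cybernetic forest"})}},
    {"function": {"name": "generate_image", "arguments": json.dumps({"prompt": "the same forest at night"})}},
]
print(available_tools(False))
print(dispatch(calls, handlers))
```

The tools list is passed only when the toggle is on, which mirrors the "permission" requirement above. Looping over every tool call is what lets a single request produce several images.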

You can also edit the LLM's response and replace it with your own image generation prompt. Your text is then sent for image generation instead of the LLM's original response.

Legacy "Generate Image" Button: As of Open WebUI v0.7.0, the native "Generate Image" button (which allowed generating an image directly from a message's content) was removed. If you wish to restore this functionality, you can use the community-built Generate Image Action.

Restoring the "Generate Image" Button

If you prefer the workflow where you can click a button on any message to generate an image from its content, you can easily restore it:

- Visit the Generate Image Action on the Open WebUI Community site.

- Click Get to import it into your local instance (or copy the code and paste it into your local instance).

- Once imported, go to Workspace > Functions and ensure the Generate Image action is enabled.

This action adds a "Generate Image" icon to the message action bar, so you can generate images directly from LLM responses. This is helpful if you want the assistant to iterate on the image prompt first and generate the image only once you are satisfied with it.
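For orientation, the Python below sketches the general shape of an Action function like this one. It follows the pattern Open WebUI functions commonly use: a class Action with an async action method that sends status events through __event_emitter__. Treat the exact signature as an assumption and check it against the Functions documentation. create_image_hypothetical is a placeholder for whatever image backend you configured, and the return value is illustrative. This is not the code of the community Generate Image Action.

```python
import asyncio
from typing import Awaitable, Callable, Optional

# An event emitter is an async callable that receives one event dict.
EventEmitter = Callable[[dict], Awaitable[None]]


async def create_image_hypothetical(prompt: str) -> str:
    """Hypothetical stand-in for a call to your configured image backend."""
    return "/hypothetical/generated.png"


class Action:
    """Minimal sketch of an Action function; the signature is an assumption."""

    async def action(
        self,
        body: dict,
        __user__: Optional[dict] = None,
        __event_emitter__: Optional[EventEmitter] = None,
    ) -> Optional[dict]:
        # Assumption: the message whose button was clicked is the last entry.
        prompt = body["messages"][-1]["content"]

        if __event_emitter__:
            await __event_emitter__(
                {"type": "status", "data": {"description": "Generating image...", "done": False}}
            )

        image = await create_image_hypothetical(prompt)

        if __event_emitter__:
            await __event_emitter__(
                {"type": "status", "data": {"description": "Image generated", "done": True}}
            )
        # Illustrative return value only.
        return {"prompt": prompt, "image": image}


# Usage: simulate a button click with a fake emitter that records events.
events: list[dict] = []


async def collect(event: dict) -> None:
    events.append(event)


body = {"messages": [{"role": "assistant", "content": "A watercolor lighthouse at dawn"}]}
result = asyncio.run(Action().action(body, __event_emitter__=collect))
print(result)
```

The message text becomes the image prompt unchanged. That is why it pays to let the assistant refine the prompt before you click the button.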

Requirement: To use Image Editing or Image Compositing (image+image generation), you must have an Image Generation Model configured in the Admin Settings that supports these features (e.g., OpenAI DALL-E, or a ComfyUI/Automatic1111 model with suitable inpainting/img2img capabilities).

Image Editing (Inpainting)

You can edit an image by providing the image and a text prompt directly in the chat.

- Upload an image to the chat.

- Enter a prompt describing the change you want to make (e.g., "Change the background to a sunset" or "Add a hat").

- The model will generate a new version of the image based on your prompt.
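At the API level, the upload-plus-prompt step amounts to a single user message that carries both the image and the instruction. The sketch below assumes an OpenAI-compatible chat payload with image_url content parts and base64 data URLs. The helper names are hypothetical, and you should verify that your configured model accepts this format.

```python
import base64


def to_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    return f"data:{mime};base64,{base64.b64encode(image_bytes).decode('ascii')}"


def edit_request(image_bytes: bytes, instruction: str) -> dict:
    """Build one user message pairing the uploaded image with the edit instruction."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": instruction},
            {"type": "image_url", "image_url": {"url": to_data_url(image_bytes)}},
        ],
    }


# Usage: the 8-byte PNG signature stands in for a real image file's contents.
msg = edit_request(b"\x89PNG\r\n\x1a\n", "Change the background to a sunset")
print(msg["content"][1]["image_url"]["url"])  # data:image/png;base64,iVBORw0KGgo=
```

With a real file, you would pass the bytes returned by open(path, "rb").read() instead of the signature placeholder.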

Image Compositing (Multi-Image Fusion)

You can combine multiple images into a single cohesive scene, a process known as Image Compositing or Multi-Image Fusion. This lets you merge elements from different sources (e.g., placing a subject from one image into the background of another) while harmonizing lighting, perspective, and style.

- Upload images to the chat (e.g., upload an image of a subject and an image of a background).

- Enter a prompt describing the desired composition (e.g., "Combine these images to show the cat sitting on the park bench, ensuring consistent lighting").

- The model will generate a new composite image that fuses the elements according to your instructions.
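The same idea extends to compositing: one message with several images and a prompt that says how to combine them. In this hypothetical Python sketch, each image is preceded by a short text label so the prompt can refer to "subject" and "background" by name. The labeling convention and the OpenAI-style content parts are assumptions, not a documented Open WebUI format.

```python
import base64


def composite_request(images: list[tuple[str, bytes]], instruction: str) -> dict:
    """Build one user message with several labeled images plus the composition prompt.

    `images` is a list of (label, png_bytes) pairs. The labels are a hypothetical
    convention that lets the prompt name each source image.
    """
    if len(images) < 2:
        raise ValueError("compositing needs at least two images")
    content = [{"type": "text", "text": instruction}]
    for label, data in images:
        # A short text part announces which image follows.
        content.append({"type": "text", "text": f"{label}:"})
        url = "data:image/png;base64," + base64.b64encode(data).decode("ascii")
        content.append({"type": "image_url", "image_url": {"url": url}})
    return {"role": "user", "content": content}


# Usage with placeholder bytes standing in for two uploaded images.
msg = composite_request(
    [("subject", b"cat-bytes"), ("background", b"bench-bytes")],
    "Place the subject on the park bench in the background image, with consistent lighting.",
)
print([part["type"] for part in msg["content"]])
```

Naming each image in the message makes instructions like "the cat from the first image" less ambiguous for the model.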